It is an increasingly common practice in the medical device industry to integrate third-party smart devices such as phones and tablets into medical device architectures and operational models.

After examining several such systems, Harbor Labs has identified a broad set of unique cybersecurity risks in smart devices that may impact patient safety and privacy. To understand these risks, let us examine the design and function of a fictitious insulin delivery system called FakePump.

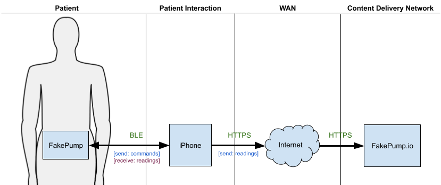

FakePump is a medical device that can read a patient’s blood glucose levels and, in response, administer the appropriate insulin therapy. The patient wears the device at all times. FakePump has a Bluetooth Low Energy (BLE) wireless interface that can pair with the patient’s iPhone to send and receive data. The patient must install the FakePump iOS app on their iPhone to enable device communication.

Data sent from FakePump includes periodic blood glucose levels and events such as an insulin injection. Data that FakePump receives from the FakePump app contains commands such as "inject N units of insulin." The app forwards all data it receives to FakePump.io, a content delivery network (CDN) managed by the device manufacturer. The CDN stores patient data and exposes APIs that mobile and website applications use to access and manipulate the collected data.

A physician, caretaker, or patient may access this data via the application or CDN.

In the FakePump architecture, the iPhone is untrusted. The manufacturer has limited or no control over the smart device, while the patient has complete control. The manufacturer can exert only minimal influence over the hardware and software capabilities of a patient’s iPhone by setting the minimum iOS version that its iOS app supports. For example, the FakePump manufacturer may set the minimum iOS version to 11. This setting guarantees that the Secure Enclave is available and that SFSafariViewController enforces cookie separation between Safari and iOS apps. These features provide secure storage for the iOS app’s private crypto keys and restrict access to web-based access tokens.
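
As an illustration of the secure key storage mentioned above, the following is a minimal sketch of generating a private key inside the Secure Enclave with the Security framework. The function name `makeEnclaveKey`, the error type, and the tag `io.fakepump.device-key` are hypothetical names chosen for this example and do not come from any real FakePump code. The sketch assumes a physical device, since the Secure Enclave is generally not available in the iOS Simulator.

```swift
import Foundation
import Security

/// Fallback error for this sketch when the framework reports no CFError.
enum EnclaveKeyError: Error {
    case unknown
}

/// Creates a P-256 private key whose material stays inside the Secure Enclave.
/// The default tag is a hypothetical identifier used only for this example.
func makeEnclaveKey(tag: String = "io.fakepump.device-key") throws -> SecKey {
    var error: Unmanaged<CFError>?

    // Allow use of the key only on this device and only while it is unlocked.
    guard let access = SecAccessControlCreateWithFlags(
        kCFAllocatorDefault,
        kSecAttrAccessibleWhenUnlockedThisDeviceOnly,
        .privateKeyUsage,
        &error
    ) else {
        if let cfError = error?.takeRetainedValue() { throw cfError as Error }
        throw EnclaveKeyError.unknown
    }

    let privateKeyAttributes: [String: Any] = [
        kSecAttrIsPermanent as String: true,
        kSecAttrApplicationTag as String: Data(tag.utf8),
        kSecAttrAccessControl as String: access
    ]
    let attributes: [String: Any] = [
        kSecAttrKeyType as String: kSecAttrKeyTypeECSECPrimeRandom,
        kSecAttrKeySizeInBits as String: 256,
        // Ask the Secure Enclave, not the application processor, to hold the key.
        kSecAttrTokenID as String: kSecAttrTokenIDSecureEnclave,
        kSecPrivateKeyAttrs as String: privateKeyAttributes
    ]

    guard let key = SecKeyCreateRandomKey(attributes as CFDictionary, &error) else {
        if let cfError = error?.takeRetainedValue() { throw cfError as Error }
        throw EnclaveKeyError.unknown
    }
    return key
}
```

The returned `SecKey` is only a reference. The private key material itself cannot be exported from the Secure Enclave, which is the property the app relies on.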

However, setting a minimum iOS version constitutes minimal control. A patient may jailbreak his or her device, thereby circumventing the iOS app’s requirements. A jailbroken device may enable an attacker to access all app data that application sandboxing would otherwise protect. More troubling, an attacker may access the FakePump iOS application binary and reverse engineer it.

These risks are inherent to any architecture that requires a smart device, including both iOS and Android devices. A reverse-engineered FakePump iOS app is a serious risk, as the effort could reveal implementation and protocol-level app defects. These defects include hardcoded credentials, secrets, and keys; improper or missing input sanitization; flawed credential rotation practices; insecure fallback mechanisms for data transmission; improper use of system libraries and functionality; and outdated software dependencies with known vulnerabilities.

A reverse-engineering effort puts FakePump user safety at risk. It reveals details not only about the iOS app but also about the pump and the CDN, such as tokens, passwords, keys, and authentication methods. An attacker may use this information to spoof or tamper with data and commands.

Even when we assume the iPhone to be unmodified, cybersecurity risks related to software implementation are manifold. For instance, if the FakePump iOS app implements any of the following, it directly impacts patient safety and privacy:

The iOS app stores configuration data such as user credentials using UserDefaults.

- Sensitive data must never be stored using UserDefaults. UserDefaults does not encrypt its contents, and other applications may access the data even if application sandboxing is enabled (e.g., using app extensions).

- Instead, the Keychain Services API should be used to encrypt and store sensitive data.
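
A minimal sketch of the Keychain approach is shown below, storing a secret as a generic-password item. The type `KeychainStore`, the service name `io.fakepump.app`, and the account value in the usage note are hypothetical names chosen for this example. A production app would also need to decide on error handling and accessibility classes that fit its own threat model.

```swift
import Foundation
import Security

/// Minimal Keychain wrapper for generic-password items.
/// The service name is a hypothetical identifier used only for this example.
struct KeychainStore {
    let service = "io.fakepump.app"

    /// Saves (or overwrites) a secret for the given account and returns the status.
    func save(_ secret: Data, account: String) -> OSStatus {
        let query: [String: Any] = [
            kSecClass as String: kSecClassGenericPassword,
            kSecAttrService as String: service,
            kSecAttrAccount as String: account
        ]
        // Remove any previous item so SecItemAdd does not fail with errSecDuplicateItem.
        _ = SecItemDelete(query as CFDictionary)

        var attributes = query
        attributes[kSecValueData as String] = secret
        // Keep the item on this device and readable only while it is unlocked.
        attributes[kSecAttrAccessible as String] = kSecAttrAccessibleWhenUnlockedThisDeviceOnly
        return SecItemAdd(attributes as CFDictionary, nil)
    }

    /// Returns the stored secret, or nil if no matching item exists.
    func load(account: String) -> Data? {
        let query: [String: Any] = [
            kSecClass as String: kSecClassGenericPassword,
            kSecAttrService as String: service,
            kSecAttrAccount as String: account,
            kSecReturnData as String: true,
            kSecMatchLimit as String: kSecMatchLimitOne
        ]
        var item: CFTypeRef?
        guard SecItemCopyMatching(query as CFDictionary, &item) == errSecSuccess else {
            return nil
        }
        return item as? Data
    }
}
```

For example, `KeychainStore().save(Data("token".utf8), account: "patient")` would store the value, and `load(account: "patient")` would return it on a later launch. Non-sensitive preferences can remain in UserDefaults.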

The iOS app copies sensitive data, including PHI and PII, to the UIPasteboard (also called clipboard).

- Sensitive data must never be copied to the UIPasteboard because the general pasteboard is accessible to all iOS apps.
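
One way to keep a sensitive field out of the pasteboard is to disable the copy and cut actions on the control that displays it. The sketch below assumes the value is shown in a `UITextField`; the class name `SensitiveTextField` is hypothetical. It covers only the standard edit menu, so other export paths in an app (for example, share sheets or drag and drop) would need separate review.

```swift
import UIKit

/// A text field for PHI/PII that refuses to copy or cut its contents,
/// so the value does not reach UIPasteboard through the edit menu.
final class SensitiveTextField: UITextField {
    override func canPerformAction(_ action: Selector, withSender sender: Any?) -> Bool {
        // Reject the two standard actions that write to the pasteboard.
        if action == #selector(UIResponderStandardEditActions.copy(_:)) ||
            action == #selector(UIResponderStandardEditActions.cut(_:)) {
            return false
        }
        return super.canPerformAction(action, withSender: sender)
    }
}
```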

The iOS app does not protect against screenshots when PHI is displayed.

- Protecting against screenshots is a unique requirement that has only really been explored by apps like Snapchat. Currently, the iOS application can receive a notification when a user takes a screenshot. At a minimum, the iOS app should log the action.
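
The logging step can be sketched as follows, using the system notification that UIKit posts after a screenshot is taken. The class name `ScreenshotAuditor` and the log message are hypothetical, and the counter exists only to make the sketch easy to inspect. The notification arrives after the screenshot has been captured, so this records the event rather than preventing it.

```swift
import UIKit
import os

/// Logs each screenshot taken while the app is running in the foreground.
final class ScreenshotAuditor {
    private var token: NSObjectProtocol?
    private(set) var screenshotCount = 0

    /// Begins observing screenshot events.
    func start() {
        token = NotificationCenter.default.addObserver(
            forName: UIApplication.userDidTakeScreenshotNotification,
            object: nil,
            queue: .main
        ) { [weak self] _ in
            self?.screenshotCount += 1
            // Record only that the event happened; never log PHI itself.
            os_log("Screenshot taken while PHI may be visible", log: .default, type: .info)
        }
    }

    /// Stops observing screenshot events.
    func stop() {
        if let token = token {
            NotificationCenter.default.removeObserver(token)
        }
        token = nil
    }

    deinit { stop() }
}
```

An app would typically create one auditor when a screen containing PHI appears, call `start()`, and call `stop()` when the screen is dismissed.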

The iOS app does not use Certificate Transparency (CT) when establishing TLS connections.

- Apple prescribes that all certificates issued after October 15, 2018, must meet its CT policy.

It should be noted that the cybersecurity risks presented in this paper were encountered in the course of Harbor Labs’ medical security analysis consulting, and were present in actual medical device architectures that included smart devices. Presenting these risks through a fictitious medical device is intended to illustrate the breadth of the patient safety and privacy issues that can be present in such architectures so that they may be avoided in future deployments.